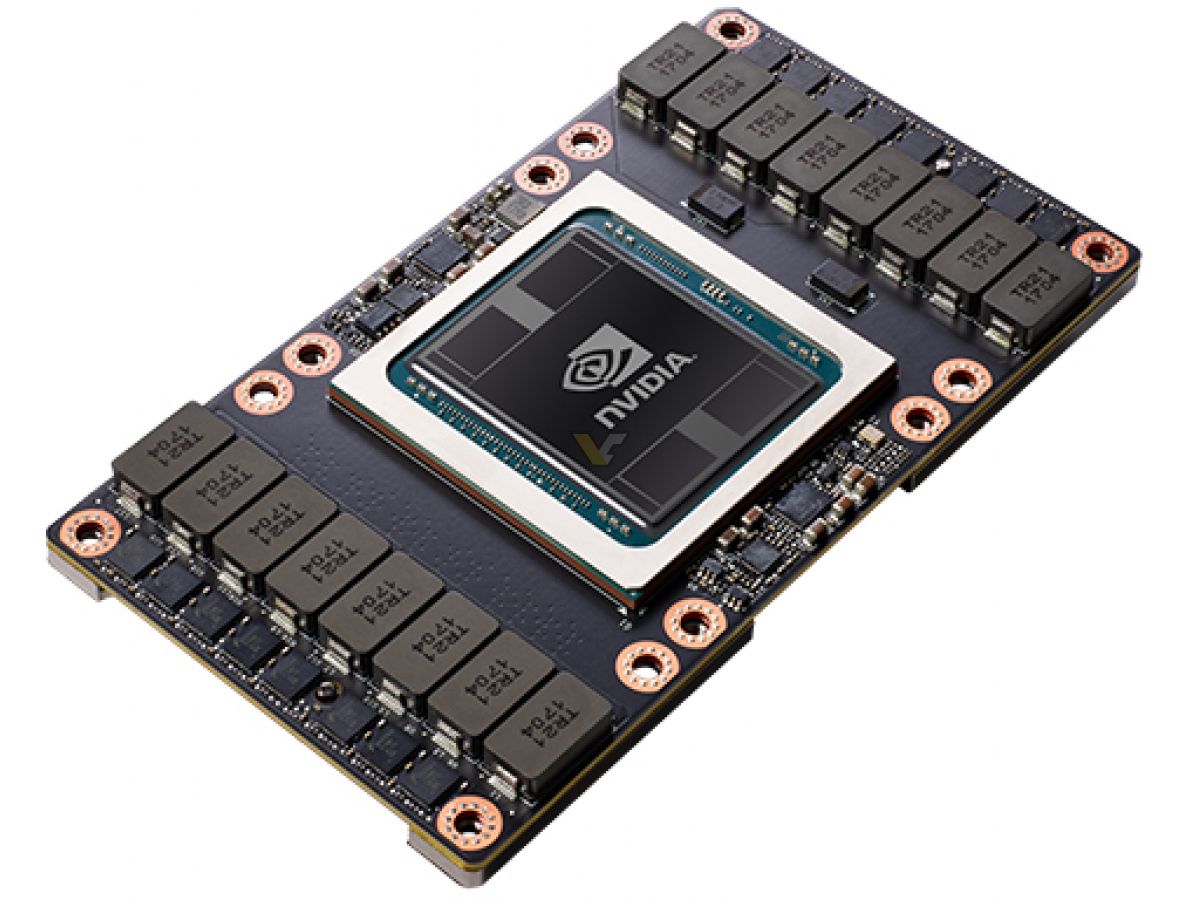

It was said that PyTorch should use Accelerate by default, although the documentation does not officially confirm this. For example, it seems to say that other math libraries will be chosen instead of Accelerate if they already exist on the system, because they're "faster" (they are not).

In my application case, torch.matmul and … are the most time-consuming part. Regarding the linear algebra speedup, AMX exclusively does matrix multiplications. I don't know what percentage of the linalg.solve algorithm is matrix multiplication and what percentage is matrix factorization. If it can't utilize the AMX, it won't run fast.

Apple's AMX should have FP64 processing power equivalent to an Nvidia 3000-series GPU. The AMX is a massively powerful coprocessor that's too big for any one CPU core to handle. That's why each block of 4 power cores must simultaneously utilize its AMX block to squeeze out all the power. That's also why the M1 Pro/Max, which has double the power cores of the M1, has double the "AMX". Note that the regular M1 also has a second AMX block for its efficiency cores, but that has 1/3 the performance.

NVIDIA has paired 40 GB of HBM2e memory with the A100 PCIe 40 GB, connected using a 5120-bit memory interface.

Table 5.8: Nvidia's Ampere A100 specifications

| Specification | Value |
| --- | --- |
| Transistor count | 54 billion |
| Die size | 826 mm² |
| FP64 CUDA cores | 3456 |
| FP32 CUDA cores | … |

Nvidia's latest H100 compute GPU, based on the Hopper architecture, can consume up to 700 W in a bid to deliver up to 60 FP64 Tensor TFLOPS, so it was clear from the start that we were dealing … The basic division that Nvidia is seeing is between HPC workloads, which need lots of FP64 and FP32 math capability and modest memory and memory bandwidth, and AI workloads, which need lots of lower precision math as well as some high precision floating point, and more importantly, a lot more memory capacity and memory bandwidth than the HPC …
For their consumer cards, NVIDIA has severely limited FP16 CUDA performance. GTX 1080's FP16 instruction rate is 1/128th its FP32 instruction rate, or after you factor in vec2 packing, the …

Regardless, if PyTorch uses Accelerate, then it uses the AMX.
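Whether a given PyTorch install was actually built against Accelerate can be checked rather than guessed. The sketch below is only an illustration: `blas_backend_report` is a hypothetical helper name, and it relies on `torch.__config__.show()`, which returns the build summary as text. Which BLAS library that summary names, and how it spells it, depends on the build, so treat a match on the word "accelerate" as a hint and not as proof that the AMX is being used.

```python
def blas_backend_report() -> str:
    """Return PyTorch's build configuration text, or a note if PyTorch is absent.

    Hypothetical helper for illustration; not part of PyTorch itself.
    """
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed; nothing to inspect."
    # torch.__config__.show() returns a human-readable build summary,
    # which normally includes the BLAS/LAPACK choice made at build time.
    return torch.__config__.show()


if __name__ == "__main__":
    report = blas_backend_report()
    print(report)
    # Case-insensitive search, because the spelling in the build text may vary.
    print("Mentions Accelerate:", "accelerate" in report.lower())
```

If the report does not mention Accelerate at all, the claim that PyTorch uses it by default does not hold for that particular build.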

I would regard whoever posted that comment with skepticism.


Is AMX already used in PyTorch? I saw you posted a question asking that and also this comment on GitHub.

Open the NVIDIA X Server Settings application. Go to the section with the name of your graphics card > PowerMizer and enable the CUDA - Double precision option. That is it, your CUDA application should now run with full speed FP64 on the GPU.

Tried with: NVIDIA GTX Titan, NVIDIA driver 319.37, CUDA 5.5 and Ubuntu 12.
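One way to check that the CUDA - Double precision option took effect is to time the same matrix multiplication in FP32 and FP64 before and after toggling it. The following is a rough sketch, not the author's method: it assumes a PyTorch build with CUDA support for the card in question (recent builds may not support older GPUs such as the GTX Titan), and `time_matmul_by_dtype` is a hypothetical helper name. It returns `None` when PyTorch or a CUDA device is unavailable.

```python
import time


def time_matmul_by_dtype(n=2048, repeats=10):
    """Return average seconds per n x n matmul for FP32 and FP64 on the GPU.

    Returns None if PyTorch is not installed or no CUDA device is visible.
    """
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None

    timings = {}
    for name, dtype in (("fp32", torch.float32), ("fp64", torch.float64)):
        a = torch.randn(n, n, dtype=dtype, device="cuda")
        b = torch.randn(n, n, dtype=dtype, device="cuda")
        torch.matmul(a, b)        # warm-up so one-time setup cost is excluded
        torch.cuda.synchronize()  # kernels run asynchronously; wait before timing
        start = time.perf_counter()
        for _ in range(repeats):
            torch.matmul(a, b)
        torch.cuda.synchronize()
        timings[name] = (time.perf_counter() - start) / repeats
    return timings


if __name__ == "__main__":
    result = time_matmul_by_dtype()
    if result is None:
        print("No CUDA-capable PyTorch setup found.")
    else:
        print(f"FP32: {result['fp32']:.4f} s, FP64: {result['fp64']:.4f} s")
        print(f"FP64 is {result['fp64'] / result['fp32']:.1f}x slower than FP32")
```

Run it once with the option disabled and once with it enabled. If the setting works, the FP64 time should drop noticeably, while the FP32 time may rise slightly.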


The NVIDIA RTX 6000 Ada Generation delivers the features, capabilities, and performance to meet the challenges of today's professional workflows. Built on the NVIDIA Ada Lovelace GPU architecture, the RTX 6000 combines third-generation RT Cores, fourth-generation Tensor Cores, and next-gen CUDA® cores with 48GB of graphics memory.

In many recent NVIDIA GPUs shipping in graphics cards, the FP64 cores run at reduced speed. For example, the GTX Titan is capable of double-precision performance that is 1/3 of its single-precision performance. However, by default the card does FP64 at a reduced speed of 1/24 of FP32. This is done because the primary audience of these consumer cards is gamers. Enabling full speed FP64 reduces FP32 performance a bit, since the maximum clock speed needs to be reduced, and it also increases power consumption, since all the power-hungry FP64 cores are running. To enable full speed FP64 on Linux, make sure you have the latest NVIDIA drivers installed.
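The two ratios quoted for the GTX Titan (FP64 at 1/3 of FP32 at full speed, 1/24 by default) imply how much double-precision throughput the setting can recover. The arithmetic can be sketched as follows. The function names are made up for illustration, and the result is a theoretical upper bound because the FP32 rate itself drops slightly when full speed FP64 is enabled.

```python
from fractions import Fraction

# Ratios of FP64 to FP32 throughput quoted for the GTX Titan.
FULL_SPEED_RATIO = Fraction(1, 3)   # with full speed FP64 enabled
DEFAULT_RATIO = Fraction(1, 24)     # default, reduced-speed mode


def fp64_speedup(full=FULL_SPEED_RATIO, default=DEFAULT_RATIO):
    """Theoretical FP64 gain from enabling full speed mode (FP32 rate held fixed)."""
    return full / default


def fp64_rate(fp32_rate, ratio):
    """FP64 throughput implied by an FP32 throughput and an FP64:FP32 ratio."""
    return fp32_rate * ratio


if __name__ == "__main__":
    print(fp64_speedup())  # 8
```

In other words, (1/3) / (1/24) = 8, so enabling the option can raise FP64 throughput by at most a factor of eight on this card.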


📅 2013-Dec-05 ⬩ ✍️ Ashwin Nanjappa ⬩ 🏷️ double, float, gpu, nvidia ⬩ 📚 Archive


How to enable full speed FP64 in NVIDIA GPU
